DefendingAIArt is a subreddit run by mod “Trippy-Worlds,” who also runs the debate sister subreddit AIWars. Some poking around made clear that AIWars is perfectly fine with having overt Nazis around, for example a guy with heil hitler in his name who accuses others of lying because they are “spiritually jewish.” So we’re off to a great start.

the first thing that drew my eye was this post from a would-be employer:

not really clear what the title means, but this person seems to have had a string of encounters with the most based artists of all time.

also claims to have been called “racial and gender slurs” for using ai art and that he was “kicked out of 20 groups” and some other things. idk what to tell this guy, it legitimately does suck that wealthy people have the money to pay for lots of art and the rest of us don’t

I really enjoyed browsing around this subreddit, and a big part of that was seeing how much the stigma around AI gets to people who want to use it. pouring contempt on this stuff is good for the world

the above guy would like to know what combination of buttons to press to counter the “that just sounds like stealing from artists” attack. a commenter leaps in to help and immediately impales himself:

you hate to see it. another commenter points out that well … maybe these people just aren’t your friends

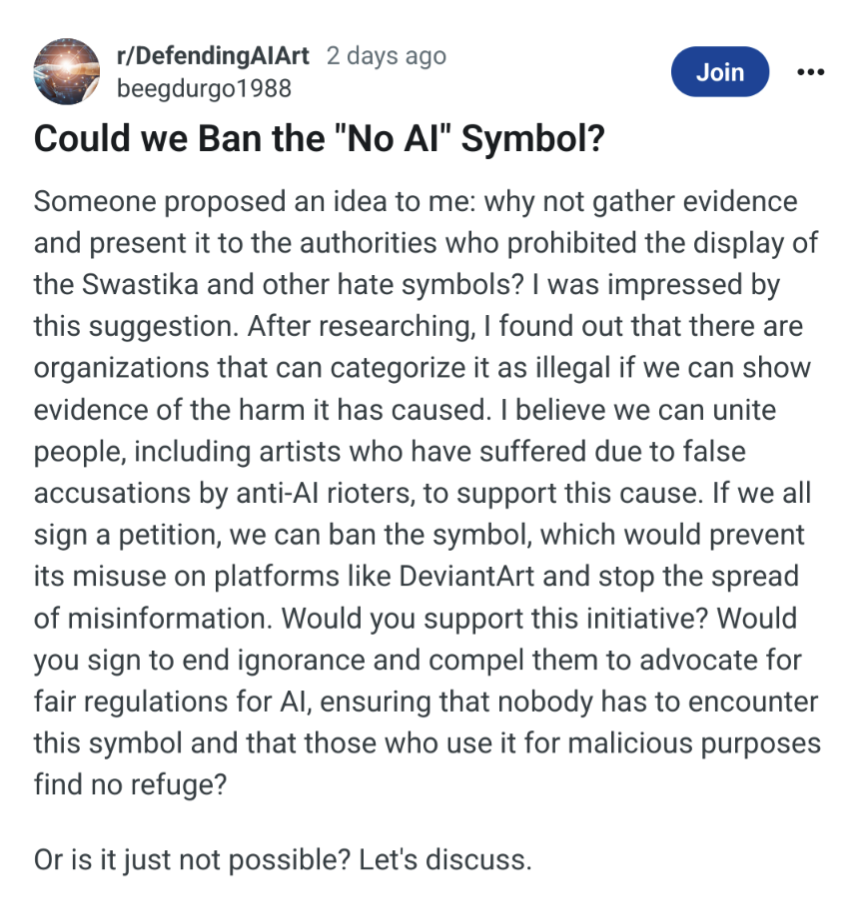

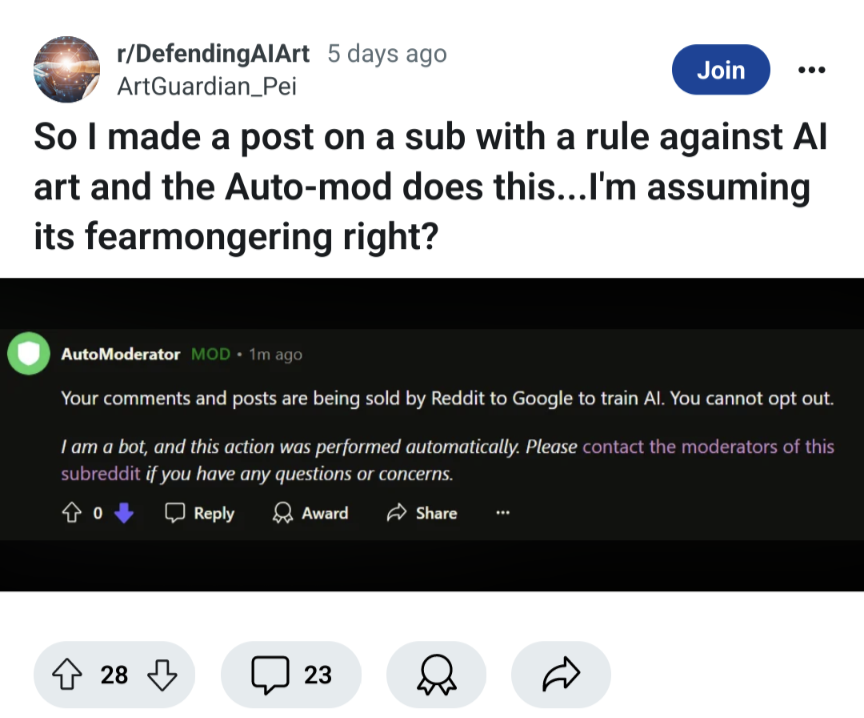

to close out, an example of fearmongering:

Yep, exactly the ones I was thinking about. They lobbied over there a while back with “prominent supporters” like Yud and Hinton. Luckily the general consensus was that they were full of shit, so the mod shut it down.

“rat”/“rats” is short for “rationalists”, which is not to be confused with the english meaning of the word but instead is a reference to the capital-R Rationalists: a de facto cult that follows the teachings originating from their sage creep-in-lead Thinking-Really-Hard non-messiah eliezer yudkowsky, centered around his blog-and-forum LessWrong (a place named in remarkable antithesis to how frequently you can find wrong things there)

one of the key things believed/feared/waffled-on-voluminously by the rationalist community is that us mere mortals tampering with computers is going to lead to creating a machine superintelligence, and that if we’re not super duper nice to it in advance of it existing then the big bad AI is going to kill us all for not having been nice enough to it (because it can make chips out of us, or whatever). (they call this “alignment”, as in “it should be aligned with human values”)

you can check the sidebar in the sister community (sneerclub) for some more references, and do some reading up around the TESCREAL bundle (as named by Timnit Gebru)

advance warning: these people are real fuckin’ dumb, and you will learn some very depressing shit

> you can check the sidebar in the sister community

Will do, thanks for the detailed answer.

Just off the top of my head, since you mention rationalists, does it have something to do with communities like Slate Star Codex?

I stumbled upon them many moons ago because someone linked an article posted there about the nuances of neutering feral cats, which was written by an actual vet iirc, but the rest of what's written there horrifies me.